Apply this Ridiculously Effective Creative Optimization Technique to Maximize ROI

Test. Optimize. Deploy. Repeat.

This is the virtuous mantra that digital and growth marketers stand by. But how does one implement it? What are the steps? What tools are available to conduct these experiments? How do we measure the uplift in performance?

Before we get started, let’s delve into why A/B testing is necessary and how it helps in maximizing campaign performance.

Creative Optimization Framework

In every collection of creatives there will be a few that perform well and others that do not. Ensuring that users are shown the better performing creatives will better attract the intended audience for your app (and increase conversions).

For this to happen, new creatives need to be added regularly while creatives that perform below par need to be removed.

Note: A creative variation (variant) contains variations to one or more of the following elements:

- Headline

- Subheadline

- Paragraph text

- Call to Action text

- Call to Action button (size and color)

- Images

- Logos

- Inclusion or exclusion of App Store / Google Play store badges

- Background

The CTA link (landing page / deep link) is, strictly speaking, not one of the creative elements that go into a variant, and is hence not considered part of the creative optimization process.

Defining the Optimization Goal

It’s important to determine the parameters the creatives will be optimized or ranked on.

Rather than go with Click-Through Rate (CTR), or Installs per 1,000 impressions (IPM), or even Click to Install (CTI), we recommend optimizing on Actions per 1,000 impressions (APM).

For example, Lifetime Value per 1,000 impressions (LTVM) is an APM metric. Optimizing on it means that underperforming creatives are removed based on their LTVM performance.

We recommend APM goals because they are closer to, and better reflect, your true business objectives. For example, if you take a CTR uplift goal, it is possible that the optimization effort steers you towards clickbait variants that are not high “value.”
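As a rough illustration of how these metrics relate, here is a minimal Python sketch. The `CreativeStats` class, its field names and the sample numbers are hypothetical and are not taken from any ad platform's API; the formulas simply follow the definitions above (a count or value scaled to 1,000 impressions).

```python
from dataclasses import dataclass


@dataclass
class CreativeStats:
    """Raw totals for one creative (hypothetical field names)."""
    impressions: int
    clicks: int
    installs: int
    ltv: float  # total lifetime value attributed to this creative


def per_mille(amount: float, impressions: int) -> float:
    """Scale an amount to 'per 1,000 impressions'; 0.0 if there was no exposure."""
    return 1000.0 * amount / impressions if impressions else 0.0


def ctr(s: CreativeStats) -> float:
    """Click-Through Rate: clicks per impression."""
    return s.clicks / s.impressions if s.impressions else 0.0


def cti(s: CreativeStats) -> float:
    """Click to Install: installs per click."""
    return s.installs / s.clicks if s.clicks else 0.0


def ipm(s: CreativeStats) -> float:
    """Installs per 1,000 impressions."""
    return per_mille(s.installs, s.impressions)


def ltvm(s: CreativeStats) -> float:
    """Lifetime Value per 1,000 impressions (one possible APM metric)."""
    return per_mille(s.ltv, s.impressions)


# Made-up numbers: a clickbait-style creative versus a higher-value one.
clickbait = CreativeStats(impressions=10_000, clicks=400, installs=20, ltv=30.0)
valuable = CreativeStats(impressions=10_000, clicks=200, installs=25, ltv=90.0)
```

With these made-up numbers the clickbait creative wins on CTR but loses on LTVM, which is the kind of divergence an APM goal is meant to catch.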

This post will describe a means to optimize creatives on the basis of APM uplift.

Brute Force Approach to Creative Optimization

The brute force approach (Buffalo theory) is extremely effective for CTR-based creative optimization. However, it is infeasible when it comes to optimization on an APM metric.

This is because the time period involved for, say, LTVM optimization is usually up to 14 days,* which makes the optimization process extremely expensive and time consuming.

* Users may download an app up to seven days after an ad impression (the standard attribution window), and another seven days are allowed for the intended post-install actions (or LTV events) to be performed and recorded.

However, if you are indeed optimizing for CTR (for example, drive traffic campaigns) and the number of creatives to be tested is manageable, then this approach is incredibly effective.

It involves launching a campaign with all creatives simultaneously. Once all creatives get a certain minimum and largely equal exposure, the CTR is calculated for each creative.

After that, underperforming creatives are identified and paused. For example, one could pause all creatives whose CTR is below a certain absolute threshold, or below a certain fraction (say, 80 percent) of the best performing creative’s CTR.

The aim is to retain a certain minimum number of creatives to ensure creative diversity, and then create more variants of the best performing creative and add them to the campaign to further optimize on elements within the creative theme.
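The pruning rule described above could be sketched as follows. This is only an illustration: the function name, the `ratio` and `min_keep` parameters and their defaults are assumptions, and the 80 percent figure is read here as "pause anything below 80 percent of the best CTR".

```python
def prune_by_ctr(ctr_by_creative, ratio=0.8, min_keep=2):
    """Split creatives into (keep, pause) lists based on CTR.

    ctr_by_creative: dict mapping a creative id to its measured CTR.
    ratio: creatives below ratio * best CTR become pause candidates (assumed reading).
    min_keep: minimum number of creatives kept live for creative diversity.
    """
    # Rank creatives from best to worst CTR.
    ranked = sorted(ctr_by_creative, key=ctr_by_creative.get, reverse=True)
    if not ranked:
        return [], []

    cutoff = ratio * ctr_by_creative[ranked[0]]
    n_above = sum(1 for c in ranked if ctr_by_creative[c] >= cutoff)

    # Keep everything above the cutoff, topped up to min_keep if needed.
    n_keep = max(n_above, min(min_keep, len(ranked)))
    return ranked[:n_keep], ranked[n_keep:]


keep, pause = prune_by_ctr({"a": 0.050, "b": 0.045, "c": 0.030, "d": 0.010})
```

Here `keep` holds the creatives that stay live and `pause` the ones to switch off; new variants of the top entry in `keep` would then be added to the campaign by hand.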

Having said that, if you are using dynamic creative optimization (DCO) tools, which effectively means having a large number of creatives to test, this approach is sub-optimal.

Note: A key assumption here in the setup described is that all creatives are intended to target the same audience and the delivery optimization event selected is the same.

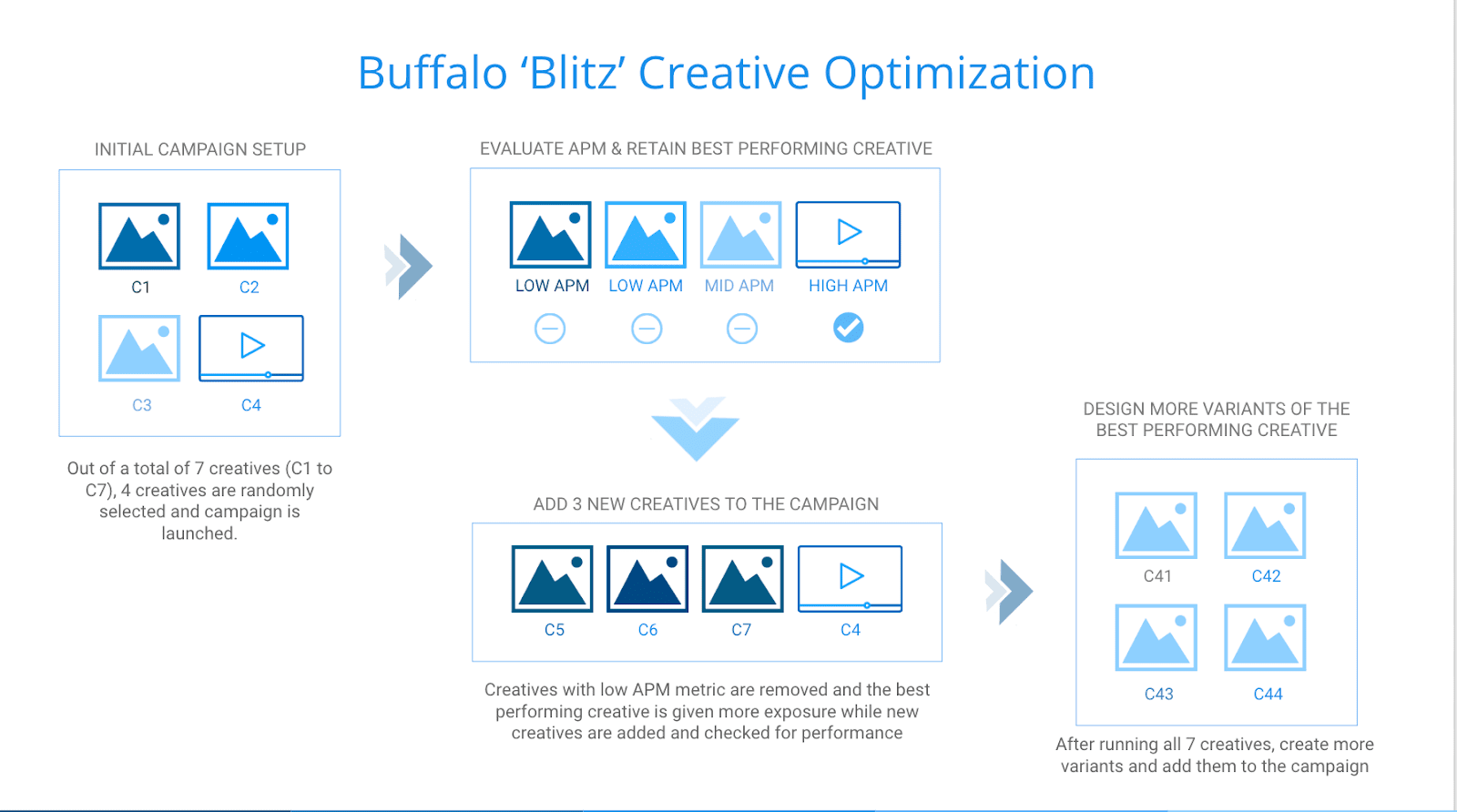

Introducing the Buffalo ‘Blitz’ Optimization Technique

The above mentioned brute force technique can be adapted and tweaked to make it work for APM uplift goals.

The constraints on time, resources and budget can be overcome with a ‘divide and conquer’ approach that we refer to as the Buffalo Blitz.

Setup and Process

Requirement: At least four creatives are available.

1. Select any four creatives at random.

2. Launch a campaign with the selected creatives. Ensure each creative gets a certain minimum (and largely equal) exposure within a defined period to reach statistical significance. Note: Exposure can be defined in terms of impressions, clicks, installs, actions or a combination of these. For example, consider a rule such as this: 10,000 impressions or 500 clicks or 25 installs or five actions, whichever comes first.

3. Calculate the LTVM metric for each creative (take a rolling window of 14 days).

4. Retain the best performing creative (based on the LTVM metric) and pause all the others.

5. Add three new creatives, selected at random, to the campaign.

6. Return to step 3 and repeat, once the new creatives have received the same minimum exposure.
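The example exposure rule from the steps above can be expressed as a small check. This is a sketch under stated assumptions: the `Exposure` class and function names are hypothetical, and the thresholds are just the example values quoted in the text, not recommended settings.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    """Counts accumulated by one creative so far (hypothetical structure)."""
    impressions: int = 0
    clicks: int = 0
    installs: int = 0
    actions: int = 0


# Example thresholds taken from the rule quoted in the text.
MIN_EXPOSURE = Exposure(impressions=10_000, clicks=500, installs=25, actions=5)


def has_min_exposure(seen: Exposure, floor: Exposure = MIN_EXPOSURE) -> bool:
    """True as soon as any one threshold is reached ('whichever is earliest')."""
    return (
        seen.impressions >= floor.impressions
        or seen.clicks >= floor.clicks
        or seen.installs >= floor.installs
        or seen.actions >= floor.actions
    )


def ready_to_rank(exposure_by_creative: dict) -> bool:
    """Only rank creatives once every live creative has had its minimum exposure."""
    return all(has_min_exposure(e) for e in exposure_by_creative.values())
```

Checking all live creatives together, rather than one at a time, is one way to keep exposure "largely equal" before the creatives are compared.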

The process never ends - keep testing. As each new variant goes live, test elements of your creative which contribute to maximum impact.

Repeatedly doing this process helps make sure that only the most engaging creative variant(s) are being served.
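One iteration of the Buffalo Blitz could look roughly like the sketch below. It assumes the LTVM values have already been measured over the rolling window and that untested creatives sit in a simple backlog list; `blitz_round`, its parameters and the sample data are all hypothetical names and values chosen for illustration.

```python
import random


def blitz_round(live_ltvm, backlog, n_new=3, rng=None):
    """Run one Buffalo Blitz iteration.

    live_ltvm: dict mapping each live creative id to its measured LTVM.
    backlog: list of creative ids that have not been tested yet.
    Returns (winner, paused, added, remaining_backlog).
    """
    rng = rng or random.Random()

    # Retain the top performer on LTVM and pause everything else.
    winner = max(live_ltvm, key=live_ltvm.get)
    paused = [c for c in live_ltvm if c != winner]

    # Draw up to n_new fresh creatives at random from the backlog.
    added = rng.sample(backlog, min(n_new, len(backlog)))
    remaining = [c for c in backlog if c not in added]
    return winner, paused, added, remaining


live = {"c1": 2.0, "c2": 5.5, "c3": 1.0, "c4": 3.2}
backlog = ["c5", "c6", "c7", "c8", "c9"]
winner, paused, added, remaining = blitz_round(live, backlog, rng=random.Random(7))
```

The next round would run on `winner` plus `added`, after they have reached the minimum exposure, with `remaining` as the new backlog.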

Advantages of Buffalo Blitz

This tiered approach helps to quickly determine a “winner” and iterate efficiently.

At the same time, it also mitigates any negative impact on upstream metrics, such as sudden drops in conversion rate. It does this by efficiently allocating budgets for maximum APM uplift with minimal campaign changes (effort). This results in increased ROI.

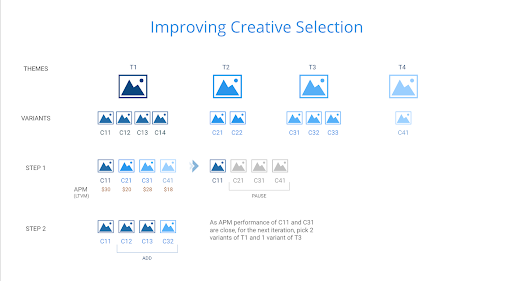

Further Optimizations on Buffalo Blitz

The ‘divide-and-conquer’ approach can be made more efficient with a small tweak to the creative selection process.

First, group the creatives into themes. A theme is a distinct group of creatives based on a concept that is markedly different in messaging and visuals from other themes. Each theme contains only its own set of creative variations.

Then, instead of selecting any four creatives, select at random four creatives in total across themes, ensuring at least one creative from each theme is included.

The reasoning behind this is based on the intuition that variance in the APM metric will be higher across themes than across creatives within a theme.

Also, when randomly selecting the next three creatives, preference is given to creatives from new themes. If none exist, then add the variants of the best performing theme.
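The theme-aware selection could be sketched along these lines. The function names, the dict-of-lists representation of themes and the tie-breaking choices are assumptions made for illustration. In particular, when there are more themes than slots, this sketch simply covers a random subset of themes.

```python
import random


def pick_initial(themes, k=4, rng=None):
    """Pick k creatives at random, covering as many themes as possible.

    themes: dict mapping a theme name to a list of creative ids.
    """
    rng = rng or random.Random()
    names = [t for t in themes if themes[t]]
    rng.shuffle(names)
    # One random creative from each theme (up to k themes).
    picked = [rng.choice(themes[t]) for t in names[:k]]
    # Fill any remaining slots at random from all other creatives.
    leftovers = [c for cs in themes.values() for c in cs if c not in picked]
    picked += rng.sample(leftovers, min(k - len(picked), len(leftovers)))
    return picked


def pick_next(themes, tested, best_theme, k=3, rng=None):
    """Pick the next k creatives, preferring themes that are still untested.

    tested: set of creative ids that have already run.
    best_theme: theme of the current top performer, used as the fallback.
    """
    rng = rng or random.Random()
    tested_themes = {t for t, cs in themes.items() if any(c in tested for c in cs)}
    from_new = [rng.choice(cs) for t, cs in themes.items()
                if t not in tested_themes and cs]
    rng.shuffle(from_new)
    picked = from_new[:k]
    # No (more) new themes: fall back to untested variants of the best theme.
    fallback = [c for c in themes.get(best_theme, [])
                if c not in tested and c not in picked]
    picked += rng.sample(fallback, min(k - len(picked), len(fallback)))
    return picked


themes = {"offer": ["o1", "o2", "o3"], "gameplay": ["g1", "g2"], "social": ["s1", "s2"]}
first_batch = pick_initial(themes, rng=random.Random(0))
next_batch = pick_next(themes, tested={"o1", "g1"}, best_theme="offer")
```

In this example `first_batch` contains at least one creative from each of the three themes, and `next_batch` takes one creative from the untested "social" theme before filling up with untested "offer" variants.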

It’s possible that untested variants of other themes would have performed better. But this can easily be solved by also including those creatives where the variance between themes is comparable.

Things to be Wary Of

Certain types of creative themes such as those with offers, discounts or rewards tend to do better than creatives which do not carry any incentives.

Also, do not reject any creative ideas before testing. While the motivation is certainly to reduce costs, uncertainty and complexity, one needs to guard against such biases.

Harness the power of best-in-breed creatives with InMobi’s powerful suite of creative tools for producing and flighting rich media and HTML5 creatives to maximize ROI and lower operations costs; also, leverage deep-learning technology that predicts display ad performance before it runs.

About the Author

Praveen Rajaretnam has over a decade of experience in mobile marketing and growth marketing. He started his career as an engineer at a cybersecurity firm, working on automation and performance testing. Praveen also started a social commerce firm, running marketing and growth strategies there. He spends considerable time researching anti-fraud methodologies, attribution mechanisms and real-time bidding technologies.
